Learning Content Lifecycle Management System

- Web

- Mobile

- Desktop

This system is a modern take on learning content management, a headless Content Lifecycle Management system in which the learner-facing learning content delivery and presentation layer (the ‘head’) is decoupled from the process of creating, storing and managing the learning content (the ‘body’).

The focus of an LMS is to do a great job of assigning, tracking and delivering published learning content to learners with the best possible experience. But an LMS does not manage the phases of the learning content lifecycle or the ongoing updates and changes. This is where this system, a ‘headless’ multi-tenant Content Lifecycle Management system, comes in.

This decoupled architecture lets an organization choose the content delivery and presentation layer (the LMS) that suits it best, while the Learning Content Lifecycle Management System (LCLMS) exclusively manages the content over its lifecycle. It does so by pairing with and complementing an LMS. Any LMS.
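The head/body split described above can be illustrated with a minimal sketch. Every name here (`ContentVersion`, `DeliveryTarget`, `InMemoryLms`, `ContentLifecycle`) is hypothetical and chosen for illustration only. It is not the actual LCLMS API. The point is that the lifecycle side depends only on a narrow delivery interface, so any LMS adapter that satisfies it can be plugged in.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class ContentVersion:
    """One version of a learning object's content (hypothetical model)."""
    lo_id: str
    version: int
    title: str


class DeliveryTarget(Protocol):
    """The 'head': anything that can receive published content, e.g. an LMS."""

    def deliver(self, content: ContentVersion) -> None: ...


@dataclass
class InMemoryLms:
    """Stand-in for a real LMS adapter; it only records what it receives."""
    name: str
    received: list[ContentVersion] = field(default_factory=list)

    def deliver(self, content: ContentVersion) -> None:
        self.received.append(content)


@dataclass
class ContentLifecycle:
    """The 'body': owns versions and knows nothing about presentation."""
    versions: dict[str, list[ContentVersion]] = field(default_factory=dict)

    def add_version(self, lo_id: str, title: str) -> ContentVersion:
        # Version numbers are assigned sequentially per learning object.
        history = self.versions.setdefault(lo_id, [])
        version = ContentVersion(lo_id, len(history) + 1, title)
        history.append(version)
        return version

    def publish_latest(self, lo_id: str, target: DeliveryTarget) -> ContentVersion:
        # Hand the newest version to whichever delivery target was chosen.
        latest = self.versions[lo_id][-1]
        target.deliver(latest)
        return latest
```

Swapping the LMS then means writing another class with a `deliver` method. Nothing in `ContentLifecycle` has to change.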

It lets an organization take control of its learning content and assets. There is no need to dig through email attachments and folders and track people down for missing documents and lost files. Manage all of your learning content and assets from a single location, organized in the cloud. It is a cloud-based central repository of learning content.

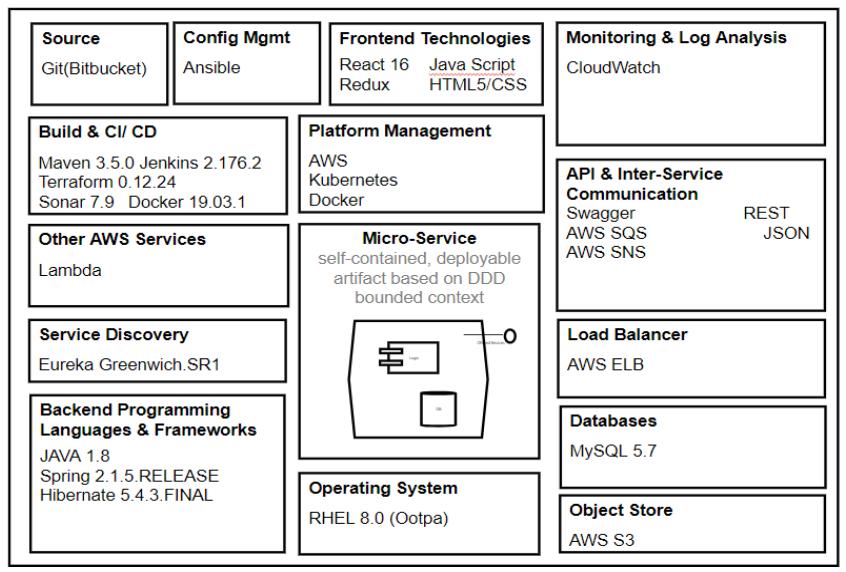

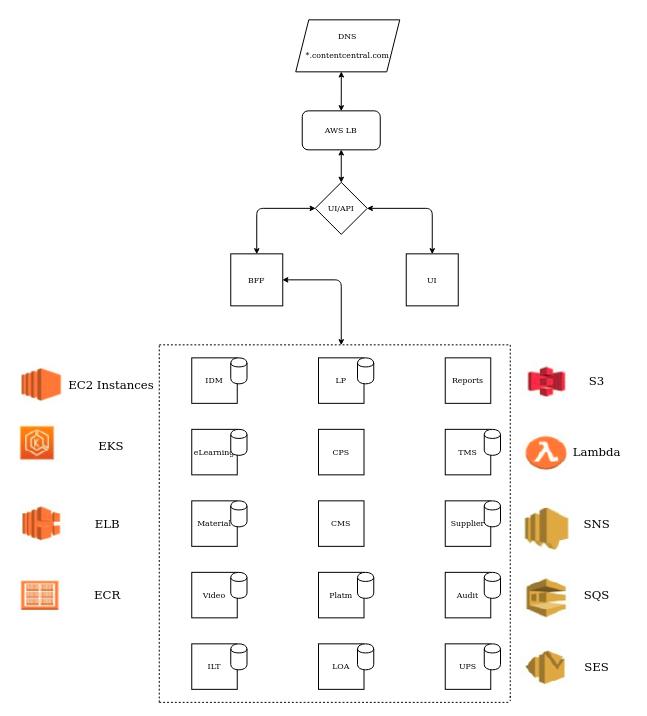

LCLMS is broken down into multiple microservices based on Domain Driven Design. Some of the key services are:

- Learning Object services

- Content Management and Content Processor Service

- Platform Manager service

- Backend for Frontend

- ReactJS-based front end

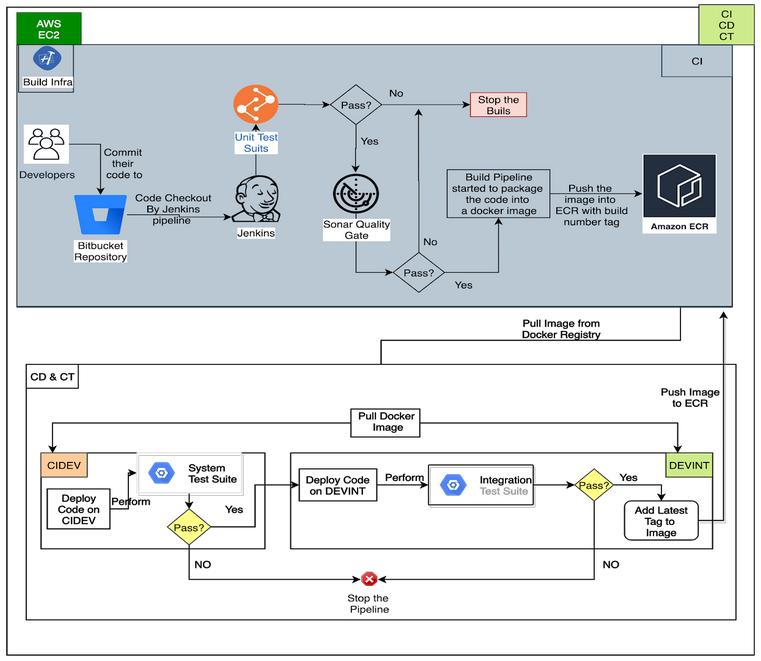

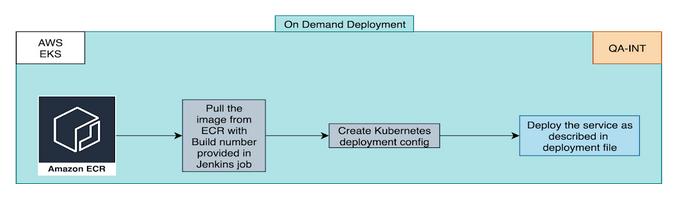

Leading open source tools such as Jenkins, Terraform, Ansible, Helm and Docker were used to achieve commit-based continuous delivery straight from the version control system to cloud platform deployments, running in high-availability mode with load-based auto-scaling.

The product roadmap called for a tool that automates the lifecycle of training content. In collaboration with stakeholders, we started working on a 6-month MVP release based on Agile principles. At the start of every sprint in the release, our client validated the UX and business use cases of the application.

Working in small, incremental sprint releases over those 6 months, our Product Delivery Engineers developed a complex, reactive, modern frontend web application integrated with a microservices-based backend system.

The most important functionality that the Learning Content Lifecycle Management System (LCLMS) provides is a repository to store the content (published or otherwise) associated with each learning object. This repository is expected to retain content for tens of thousands of LOs (Learning Objects) and multiple versions of each LO. Typically, a single version of an LO has to maintain multiple files of different sizes and types. Over time, more LOs are added to LCLMS, increasing the storage requirements of the content repository. Given these requirements, AWS S3 was chosen as the storage backend for the LCLMS content repository. S3 provides virtually unlimited, versioned, highly available and secure storage. With its support for multiple storage classes, S3 also makes it possible to optimize overall storage cost based on content access patterns. It was chosen over an on-premise content repository because, on premise, all of these capabilities would have had to be built from scratch.
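As a rough illustration of how LO versions and storage classes could fit together, the sketch below builds object keys for the files of one LO version and a lifecycle configuration that moves older content to cheaper storage classes. The key layout, rule ID, prefix and day thresholds are all assumptions made for this example and are not taken from the actual LCLMS design. The returned dictionary follows the general shape of an S3 bucket lifecycle configuration, but nothing is sent to AWS here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoFile:
    """One file belonging to a specific version of a learning object."""
    lo_id: str
    version: int
    filename: str

    def key(self) -> str:
        # Hypothetical layout: learning-objects/<lo_id>/v<version>/<filename>
        return f"learning-objects/{self.lo_id}/v{self.version}/{self.filename}"


def lifecycle_rules(
    prefix: str = "learning-objects/",
    ia_after_days: int = 30,
    archive_after_days: int = 180,
) -> dict:
    """Build a lifecycle configuration that tiers content down over time.

    The thresholds are illustrative assumptions, not measured access patterns.
    """
    if archive_after_days <= ia_after_days:
        raise ValueError("archive_after_days must be greater than ia_after_days")
    return {
        "Rules": [
            {
                "ID": "tier-down-lo-content",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    # Infrequently accessed content moves to a cheaper class first...
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    # ...and is archived later.
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }
```

For example, `LoFile("lo-42", 3, "slides.pdf").key()` yields `learning-objects/lo-42/v3/slides.pdf`. A real deployment might instead rely on S3's native object versioning and keep a single key per file.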

A microservices architecture based on Domain Driven Design was chosen to allow the different business functions of LCLMS to evolve independently. It also helps keep LCLMS maintainable in the long run.

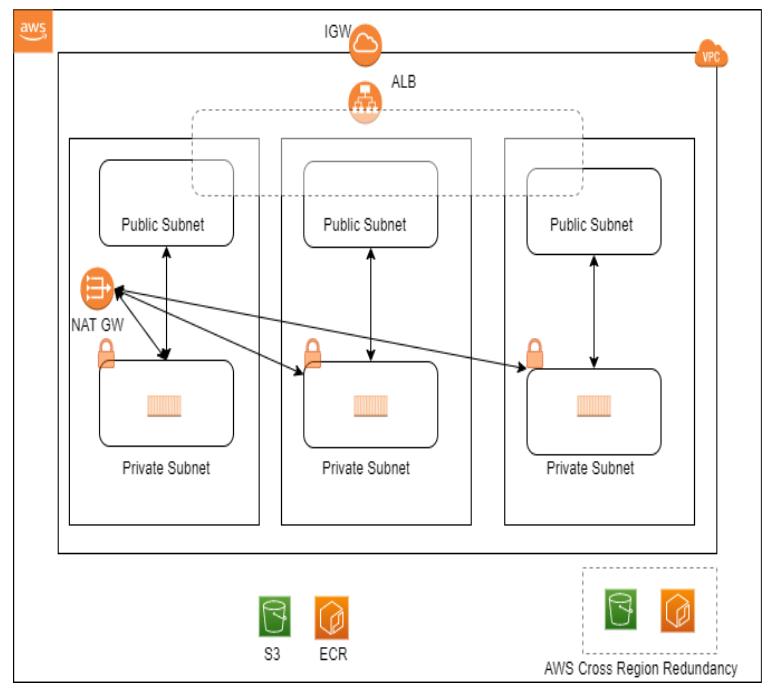

This architecture would meet the following business requirements:

The frontend web application is deployed as an independent service alongside all the backend microservices in EKS, the AWS-managed Kubernetes environment.

Our solution let our client optimize their investment through rapid delivery of a flexible, secure, cloud-based, reactive SPA with an appropriate architecture that scales automatically, is highly available, and has a completely automated one-click delivery pipeline. Finally meeting the requirements of the end customers to
